Humour, comics, tech, law, software, reviews, essays, articles and HOWTOs intermingled with random philosophy now and then

Filed under:

Tutorials and HOWTOs by

Hari

Posted on Mon, Apr 6, 2020 at 19:12 IST (last updated: Thu, Apr 9, 2020 @ 18:09 IST)

In the previous part, I had introduced the grammar of our Toy calculator language. If you haven't already, read part 1 and part 2 first. Here is a summary of the grammar rules portion:

toycalc : (statement TERMINATOR)+;

statement : (OPERATION EXPR | PRINT STRING | GETVALUE);

Basically the program is a series of statements, which can be one of operation followed by an expression, or a print statement or a statement to get and print the current calculator value.

Here is the lexer definition which follows the above parsing, analyzed line by line:

TERMINATOR : ';';

The above is a token. Tokens start with upper-case characters in ANTLR grammar files and for readability the entire token is made upper-case as convention. Tokens are basically lexing rules - how to read characters from a stream of input and organize a bunch of characters as tokens. Mostly lexer rules are (or should be) simple and unambiguous, because problems in tokenizing can lead to frustrating problems with parsing the grammar.

The above rule defines what is our statement terminator (a literal semi-colon, but we could use any other character also - it is our grammar after all!). Note that the terminator is used in the first rule of our grammar following a statement.

OPERATION : 'SETVALUE' | 'ADD' | 'SUB' | 'MUL' | 'DIV' ;

The next one defines what is an operation. Note that we use string literals (we could also use symbolic constants, but for simplicity sake I have used string literals). This is simple: we define a set of alternatives, i.e. operation can be either of setting a value, add, subtract, multiply or divide. In our Toy Calculator each operation is followed by only one number (integer or decimal number, represented by the token EXPR), and the operation is applied on the calculator's value, like say, the statement ADD 23.25; will add 23.25 to the current calculator value.

PRINT : 'PRINT';

GETVALUE : 'GETVALUE';

These are self-explanatory. PRINT is a token for the literal word PRINT from the input stream and GETVALUE is a token for the literal match GETVALUE. As already defined conceptually, the first should print a string enclosed in double quotes and the next one should simply display the current calculator value.

EXPR : INTEGER | FLOAT;

The above defines a token as either of two tokens, i.e. INTEGER or FLOAT. This makes it easy to treat a class of tokens as one, or if required individually also. In this case, the token name EXPR might as well be NUMBER, but I chose EXPR as a token name. You can always change it if you wish.

STRING : '"'(.*?)'"';

This is defining what is a string in our language. Basically it is a very simple rule (most real-world applications have escaping rules for strings, which makes it much more non-trivial) but what this rule says is that match the first double quote, and then read any character 0 or more times and match the next double quote. Note that the question mark makes this match non-greedy, i.e. the parser will stop at the very next double quote and not keep reading until the longest possible match. This is an important thing to note, because if the match is greedy, our grammar will fail, because the lexer will hunt for the longest match between two double-quotes.

Note: Our definition of STRING has severe limitations in a production use scenario - you cannot have double quotes inside the string as our lexer will stop at the first double quote character it meets. You cannot have any escape sequences. Also all whitespaces are treated literally (which may or may not meet your requirements). But for our basic Toy calculator, all we want is a way to display a short message to the user and so this should do the trick.

INTEGER : [0-9]+ | '-'[0-9]+;

FLOAT : [0-9]+'.'[0-9]+ | '-'[0-9]+'.'[0-9]+;

The next two tokens define an integer/float respectively. Here the form [0-9]+ defines a range, i.e. any digit between 0 and 9 repeated one or more times. Note the alternative i.e. a minus character before the [0-9]+. An integer can be positive or negative.

A FLOAT is similarly defined, except that, there should be a decimal point between digits, i.e. one or more digits followed by a decimal point and followed by one or more digits. The alternative for a negative number.

In this case, you might have noticed we repeat the pattern [0-9]+ so many times but in itself is not a token to be recognized by the parser. In such cases, you can use fragments, i.e. symbolic constants that are not parsed as tokens but are meant to be used to build tokens. Symbolic constants are defined with a keyword fragment in ANTLR like this:

fragment DIGIT [0-9]

and we could replace the corresponding lexer rules with:

INTEGER : DIGIT+ | '-' DIGIT+;

FLOAT : DIGIT+'.'DIGIT+ | '-'DIGIT+'.'DIGIT+;

However, I have chosen not to use it in my grammar. But for larger and less trivial grammars, using fragments will arguably make the grammar more readable.

Finally, the last two lines deserve special mention.

COMMENT : '/*'(.*?)'*/' -> skip;

WS : [ \t\r\n]+ -> skip ;

The above defines two special lexer rules that cannot be used in parsing. Basically the -> skip instructs the lexer to discard these tokens. Here, a comment is defined as any content that starts with /* and ends with */ i.e. basically C-style comments. Again, like strings, we are using non-greedy matching, so as to catch the first match of the comment terminator */ properly.

The next rule discards one or more whitespaces between other characters in the stream, i.e. spaces, tabs, carriage return and newline characters. This will have an interesting effect in our grammar, since we basically state that all whitespaces are meaningless to the parser. Basically, with such an approach, we can combine multiple (or even all) statements in a singe line with the statement separator character distinguishing individual statements. Also, we need not have a space between two distinct tokens, i.e. a statement like ADD 10 can as well be written as ADD10 as per our grammar, since 'ADD' matches one token rule and 10 matches another token rule unambiguously. But more on that later.

One very important point about lexing rules. If there are two or more lexing rules that are ambiguous i.e. matching one particular pattern, ANTLR will give prominence to the first rule. Hence the ordering of rules is important. A more general rule, if it appears above a more specific rule that matches a subset of the general rule, will always win. Meaning the specific rule will never be matched. For example:

IDENTIFIER : [a-z]+;

PRINT : 'print';

In the above, the PRINT token will never be generated by the lexer, as the previous token IDENTIFIER defined as any combination of one or more lower case characters will match the word 'print' (note the case sensitivity).

In the next part, we will generate the actual parser code for our grammar using ANLTR and do something useful with the generated classes. This is where all the magic happens.

Filed under:

Tutorials and HOWTOs by

Hari

Posted on Sun, Apr 5, 2020 at 14:57 IST (last updated: Thu, Apr 9, 2020 @ 17:56 IST)

In the second part of this series (the first part here), we will set up NetBeans/Maven to work with ANTLR, and also build our first grammar. I will assume that you have installed Java/NetBeans and also created a new empty Maven project. Hereinafter all references to subfolders will be relative to this project folder.

Setting up Maven

Add the following lines to pom.xml found in Project Files, under the Projects sidebar in NetBeans. This will pull in the required dependencies to build the ANTLR project as well as set up automatic generation of Java classes from our ANTLR grammar file.

<dependencies>

<dependency>

<groupId>org.antlr</groupId>

<artifactId>antlr4-runtime</artifactId>

<version>4.7.2</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.antlr</groupId>

<artifactId>antlr4-maven-plugin</artifactId>

<version>4.7.2</version>

<executions>

<execution>

<goals>

<goal>antlr4</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

This will pull in the ANTLR4 dependencies when building the ANTLR project from NetBeans.

Our Grammar

As per the specifications set out in the earlier part of this series, it is now time to define our grammar. If you haven't already, please read the previous part to understand the conceptualization of the grammar. That will make it easier to follow the remaining part of this series.

First under your project folder, create a new folder antlr4 under src/main. This is also a good time to get familiarized with the EBNF notation which is the notation used by ANTLR in describing grammars.

Create a new grammar file ToyCalc.g4 inside the src/main/antlr4 directory under the full package subfolder i.e in our case org/harishankar/toycalc. Thus the grammar file will reside in src/main/antlr4/org/harishankar/toycalc. By putting the grammar definitions here, the maven ANTLR plugin will automatically generate the sources for the parser in the generated sources folder of the project.

ToyCalc.g4 is the file where we will define our grammar (both lexical analysis as well as parsing logic in this case, but it is possible in ANTLR to separate them into separate files).

This is our basic grammar.

grammar ToyCalc;

toycalc : (statement TERMINATOR)+;

statement : (OPERATION EXPR | PRINT STRING | GETVALUE);

TERMINATOR : ';';

OPERATION : 'SETVALUE' | 'ADD' | 'SUB' | 'MUL' | 'DIV' ;

PRINT : 'PRINT';

GETVALUE : 'GETVALUE';

EXPR : INTEGER | FLOAT;

STRING : '"'(.*?)'"';

INTEGER : [0-9]+ | '-'[0-9]+;

FLOAT : [0-9]+'.'[0-9]+ | '-'[0-9]+'.'[0-9]+;

COMMENT : '/*'(.*?)'*/' -> skip;

WS : [ \t\r\n]+ -> skip ;

Each lowercase identifier is a parser rule and each uppercase identifier is a lexical rule. The lexical rules are placed below the parsing rules. The first line defines that our file is ANTLR grammar file (combined lexer and parsing rules) with the name ToyCalc.

Let us analyze the parsing rules first:

toycalc : (statement TERMINATOR)+;

statement : (OPERATION EXPR | PRINT STRING | GETVALUE);

The first is the entry point of our grammar which defines that main grammar is basically one or more statements followed by a terminator token (defined by our lexer). The + sign stands for one or more.

The next line defines what a statement is in our language. The pipe symbol is used to define a set of alternative constructs that can apply to the rule. In this case, the rule states that a statement can be an "OPERATION" followed by an "EXPR" (expression, which in turn can be a positive or negative integer or decimal number). A statement can also be a "PRINT" statement followed by a "STRING" to be printed (which is basically defined as any literal string enclosed in double quotes), or a simple "GETVALUE" statement which doesn't need any other token following it.

Recall the conceptualization of our toy language. A statement can consist of either an operation (one of setting/resetting the calculator value, adding, subtracting, multiplying or dividing); a print statement to display a message; or a statement to display the current value of the calculator. This distinction is rather important, because the way we structure the grammar will influence how we implement the logic. That is why it is important to have structured our grammar well before putting down the code. In this case, the grammar is quite trivial so it may not be so important to have a mental map of it before-hand. But still it is a good idea to map out the entire grammar before defining it in code.

Note that each of the above uppercase identifiers found in the grammar file (i.e. TERMINATOR, OPERATION, EXPR, PRINT, STRING, GETVALUE etc) is a token that is emitted by the Lexer when parsing plain text. These tokens are "terminators" i.e. they are the end points of the grammar, the basic building blocks on which we construct the rules.

In the next part, I will walk through the lexical analysis (which is the first stage of parsing) that is required to generate these tokens.

Filed under:

Tutorials and HOWTOs by

Hari

Posted on Sat, Apr 4, 2020 at 22:16 IST (last updated: Sun, Apr 5, 2020 @ 12:24 IST)

I have always been interested in pattern matching and parsing, and recently have re-kindled my interest in Java. So it is only natural that I came across ANTLR. ANTLR is a parser-generator that works with multiple languages and originally written to generate Java code. It certainly caught my interest, since it not only combines a lexer with a parser generator, it also builds a parse-tree object, ready for you to feed into further processing / compiling / code generation. You can certainly using the lex/yacc, flex/bison combination, but ANTLR certainly seems much more convenient. For the theoritically minded, ANTLR generates LL(k) parsers.

In this article, I will discuss how to set up ANTLR with NetBeans and Maven and how to build a simple toy calculator language with this wonderful tool. While pattern matching and parsing are complex subjects, ANTLR certainly does a lot of the heavy lifting and helps you focus purely on the grammar of the language you are designing and using the results of parsing that grammar.

Why this article (series)

This article is basically to document my own effort at learning ANTLR and also give an idea to others as to how to build a basic simple "language" with this tool. I found many tutorials / Stack Overflow answers etc, both advanced and simpe, and have tried to distill their essence into this.

Defining our scope for the First Cut

The grammar I am about to design is very simple, yet involved enough to get an idea of what it is about, and it should be easy enough to extend. Since this is as much a learning experience for me as for the reader, I appreciate corrections/comments if I have made any mistakes or errors.

I assume that you have a basic knowledge of pattern matching (or regular expressions) and a working knowledge of Java.

Before going into the technicalities, it is good to define our scope. Most of the tutorials I read dived straight into the code, which makes it somewhat hard to understand what the author is trying to achieve. Avoiding that temptation, before writing a single line of code/grammar construct, I wanted to explain the scope which makes both the features and limitations of our end result clear. So, here is what I intend to achieve in the first cut of our grammar of ToyCalc:

Conceptually:

- It is a basic calculator which stores exactly one value (float/double) in memory. All operations are carried out on that value. The following operations would be supported: Setting (or resetting) the value, and basic operations like adding, subtracting, multiplying, dividing. If no value is set initially, our calculator will assume 0.

- Our calculator language will support statements to print any textual messages (very basic string without escape sequences) also a statement to display the current value.

- Our calculator will execute all statements from top to bottom immediately and unconditionally. Hence in our first cut, we will not build any "Syntax Tree" or construct. This is so that I can concentrate on designing the "grammar" part.

- What we don't have: conditionals, loops, variables etc.

Grammatically:

- Our program will consist of one or more statements.

- Each statement will be either of the following, ie:

SETVALUE <num> - for setting or resetting the current value.GETVALUE - print the current value.ADD <num> - add <num> to the current value and store it to the current value.SUB <num> - similarly subtract from current value.MUL <num> - multiplyDIV <num> - dividePRINT <string> - print a simple text message.- Comments will start with

/* and end with */ and everything inside the comments will be ignored.

- Number can be either positive or negative, integer or a decimal. Number will not support octal, hexadecimal or e-notation representation.

- All whitespaces except the significant whitespace separating the above tokens will be ignored, except within the string literal between

" and ". We can also choose a different string delimiter character. But our string literal will not support any escape sequences.

- Each statement will be terminated by a semicolon.

- For simplicity sake, above keywords will be case-sensitive i.e you cannot use

setValue or setvalue in the place of SETVALUE. Or you can make it lower case if you wish - it is a matter of taste. Case insensitivity for keywords will be introduced in the second cut as an option.

A sample program (of our first cut ToyCalc) will look like this:

/* A sample ToyCalc Script

hello world. */

SETVALUE -110;

/* Note that since we

use statement separator ; we can have

multiple statements in one line */

MUL 5.5; ADD 10; DIV 3;

PRINT "The current value is: ";

GETVALUE;

DIV 48.32;

PRINT "The final value is: ";

GETVALUE;

So without further, ado, let us dive into the requirements.

You need:

- NetBeans - which can be downloaded from here. I am using Netbeans 11.0 LTS.

- a Java JDK - OpenJDK if you are using Linux, you can probably use your distro's package management tool to get it. On Windows you can just get the latest Oracle JDK from the Java website here and install it (or if you are worried about Oracle's licensing, you can still use OpenJDK on Windows).

That's about it. Other dependencies can be pulled in using Maven directly in Netbeans to build your project.

First steps

- Fire up Netbeans.

- Under the

Tools menu, go to Java Platforms. Add the correct path to your JDK.

- Choose

File -> New Project...

- Under the category,

Java with Maven, choose Java Application.

- I named my Application

ToyCalc giving the group ID org.harishankar. You can give whatever name you want. NetBeans will automatically create the package org.harishankar.toycalc for this application.

You should now have an empty Maven project.

Will be continued in Part 2.

Filed under:

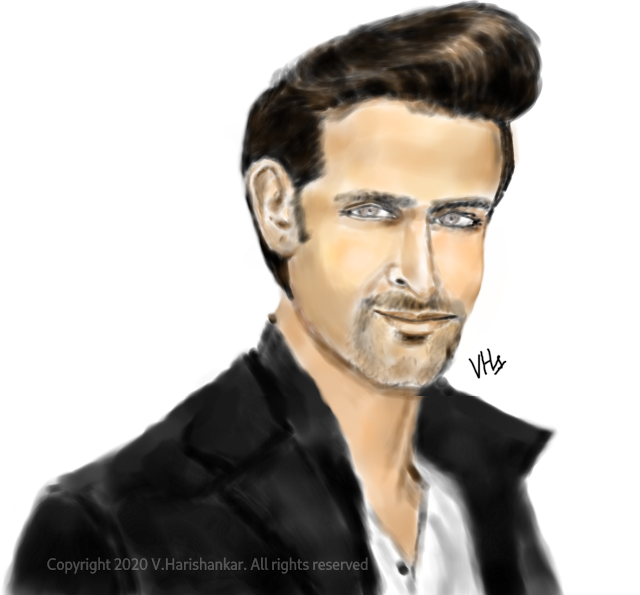

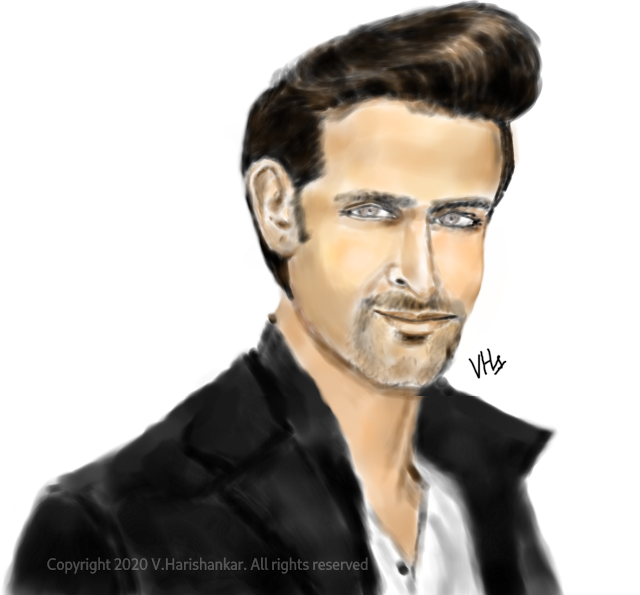

Artwork/Portraits/Caricatures by

Hari

Posted on Mon, Mar 23, 2020 at 11:22 IST (last updated: Mon, Mar 23, 2020 @ 11:22 IST)

Portrait/caricature of Hrithik Roshan. Painted using Krita with my iBall pen tablet. Yes, took out and dusted my iBall pen tablet after a long time.

Filed under:

Artwork/Portraits/Caricatures by

Hari

Posted on Sat, Mar 21, 2020 at 20:32 IST (last updated: Sat, Mar 21, 2020 @ 20:32 IST)

A portrait / caricature after an extended break - Amit Shah, BJP leader and present Home Minister of India.

Filed under:

Software and Technology by

Hari

Posted on Tue, Feb 11, 2020 at 19:34 IST (last updated: Tue, Feb 11, 2020 @ 20:33 IST)

After a lot of contemplation and analysis I recently bought an Apple iPad to avoid carrying around a heavy laptop all over the place. I did consider Android initially but all the good options (read Samsung Galaxy tabs) are priced almost as much or more than the basic iPad model (which works fine for me for basic reading, email and even typing short documents with the on-screen keyboard). I didn't want to risk my money on a basic Android tablet from a lesser known brand and I knew I couldn't go wrong with the iPad (since I already use the iPhone 5s I am quite comfortable and familiar with Apple's ecosystem). The latest iPad also features the iPadOS which brings some features that make it more laptop-like, particularly the file manager.

I know that I am not supposed to be enamored by the closed source, walled garden of Apple's universe but it really delivers a smooth and polished user experience which is hard to argue against. Though I will never get weaned away from Linux and will continue using my laptop for most of the heavy duty productivity work, I find the iPad incredibly convenient for basic productivity with mobility. From the few days of usage I am finding the experience great so far but I will report back after extended usage particularly with Office apps such as Collabora Office (LibreOffice port for iOS) and Apple's own Pages.

Pages:

1

2

3

4

5

6

7

8

9

10

...

140